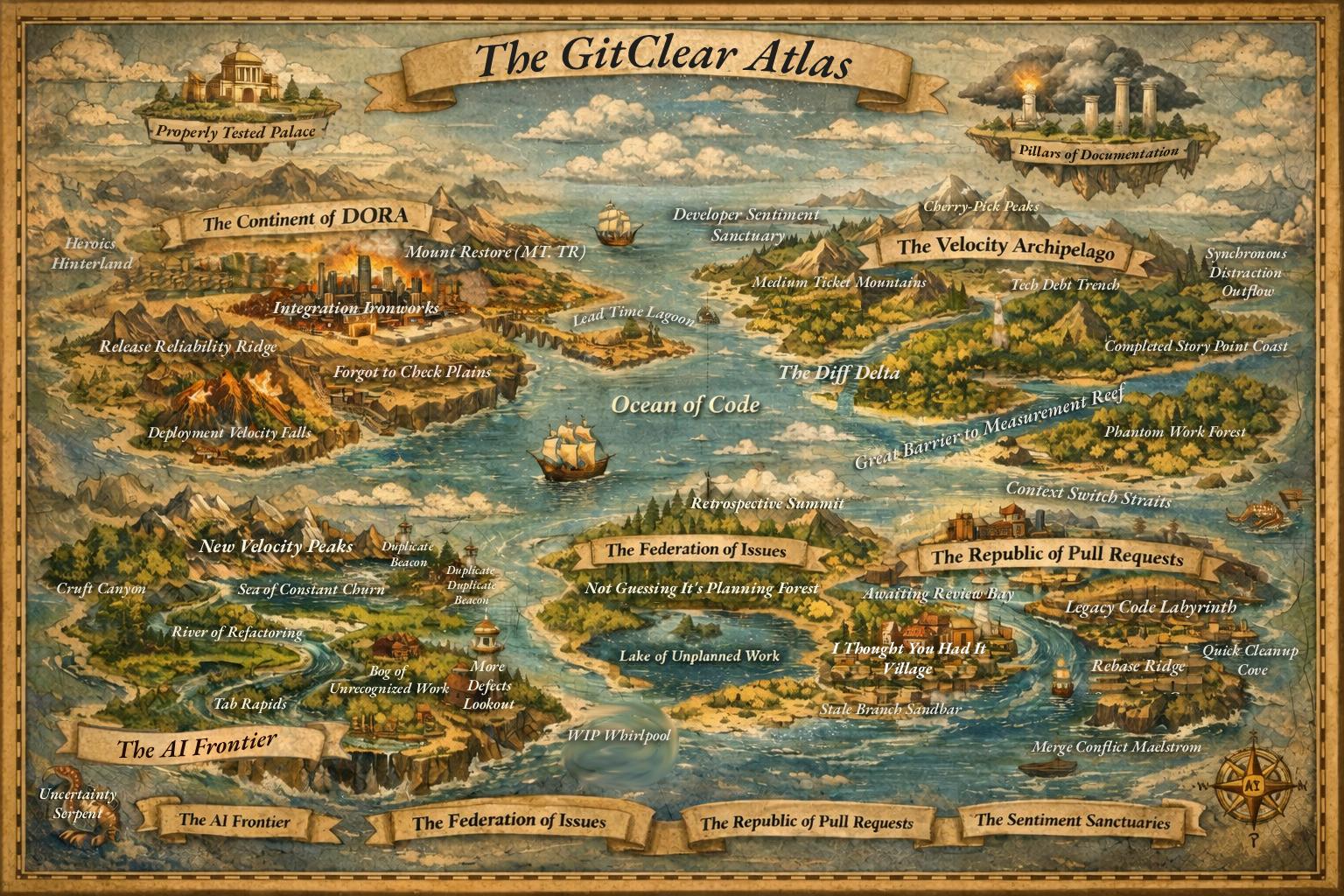

Developer Metrics Encyclopedia: 70+ Stats for AI, Velocity, Debt, DORA, Issues and PRs - Defined & Illustrated

By Bill Harding, CEO/Programmer, GitClear

Last updated July 8, 2025